Autocompleted Intelligence

Intelligence isn't inherent to ChatGPT; it is the ghost in the machine.

If you follow the headlines, you see a lot of stories about AI these days. I would know; I have clicked on all of them. Maybe even a few that were actually about someone named Al, since in a sans-serif font "AI" and "Al" look identical.[1]

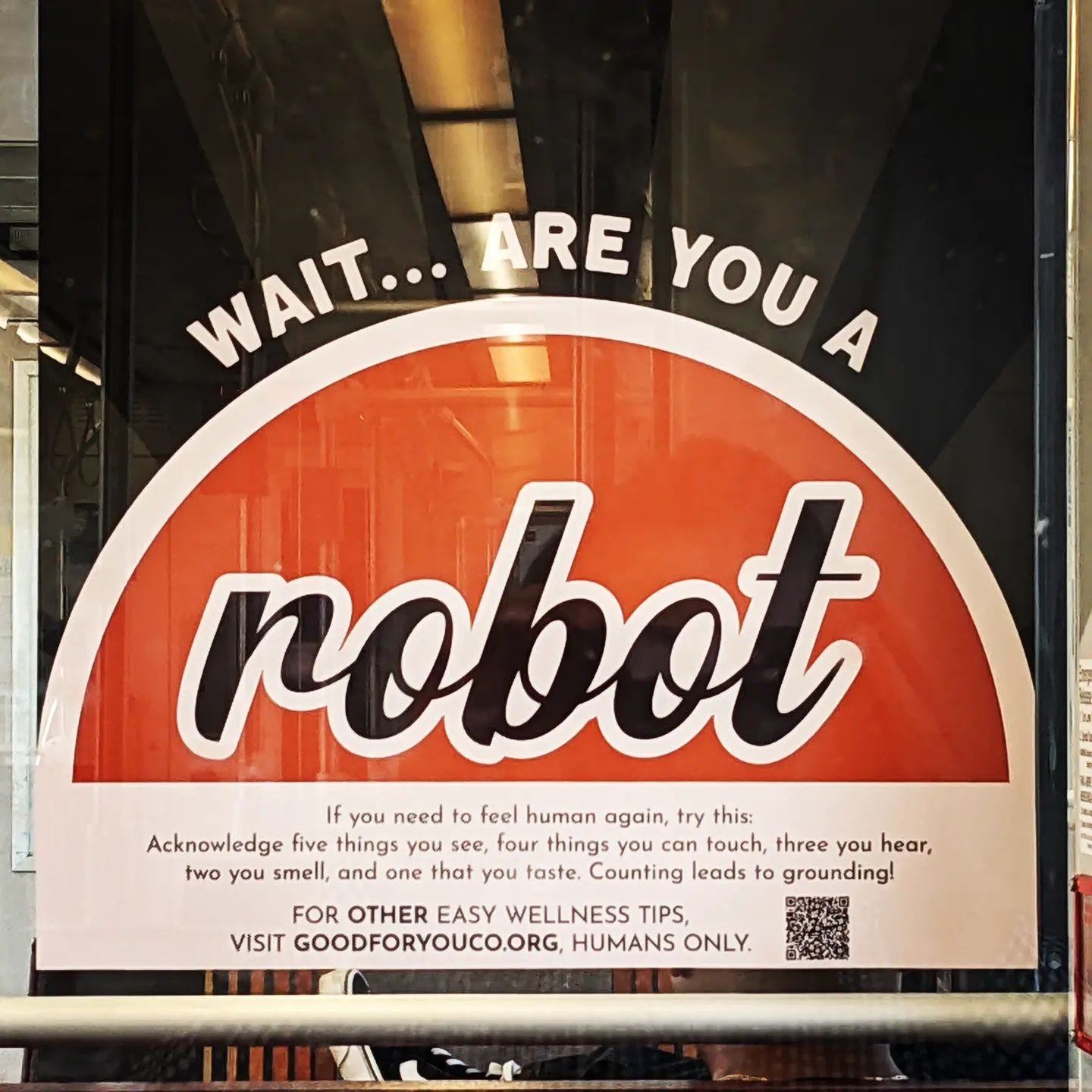

It’s understandable: this is a unique moment in human history, though maybe not in human evolution. We sense a pair of eyes out there in the dark, and we aren’t sure what they belong to, or whether it is smarter than us.

These articles cover just about every possible human reaction to something new: curiosity, distrust, excitement, caution, terror, and acceptance of our doom, sometimes in that order. Much of the AI discussion rests on a deep misconception. Some authors start out by acknowledging the flaw in anthropomorphizing the technology and then dive right into exactly that. It is understandable; it's simply more fun to write, read, and think about an intelligent agent. Agency captures the human imagination in a way inanimate things don't.

But what is actually out there? It's more like "autocomplete on steroids," as I heard someone say on a podcast. On a fundamental level, it is similar to the feature that suggests words while you type into a browser search bar or a text message: the program guesses what you mean and fills in the rest.

Traditional autocomplete finishes your input. Products like ChatGPT wait until you are done and autocomplete the other side of the conversation. They can do this because we have been talking to each other through computers like crazy for the last several decades, and all that data is there to train on. They have also read an enormous share of the writing published before that.
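To make the analogy concrete, here is a toy autocomplete in Python. It is a deliberately crude sketch under obvious simplifying assumptions: it only counts which word follows which in a tiny made-up corpus, and the function names are my own. Real LLMs predict the next token with a huge neural network rather than word counts, but the basic loop is the same idea: predict a likely next piece, append it, repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows, prompt, max_words=5):
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A tiny stand-in for "decades of us talking to each other".
corpus = "how are you doing today i am doing well thanks how are you"
model = train_bigrams(corpus)
print(autocomplete(model, "how are", max_words=2))  # prints: how are you doing
```

The program has no idea what "how are you" means; it only echoes the patterns in what people wrote. Scale that up by many orders of magnitude and the echo starts to sound like someone.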

Computers are naturally awful at talking to people, and that is as true as ever. But after absorbing ungodly amounts of data and electricity, the program autocompletes a very convincing simulation of a personal response, and we experience it as an intelligence. That we think of it as an intelligence at all is partly down to clever marketing. Even in OpenAI's own API, the endpoint behind ChatGPT's models is called Chat Completions. In just about every context where LLMs (large language models) are used, autocomplete is the more accurate way to understand what is actually happening.

Here's the twist: it feels like we are interacting with an intelligence because that is exactly what we are doing. Intelligence isn't inherent to ChatGPT; it is autocompleted by ChatGPT. It is the ghost in the machine, the aggregated spirit of a billion human hearts and minds. The technology just beams that spirit into something we recognize as an intelligence, the way a projector beams a hologram.

That's why all the LLM-powered chatbots feel so similar: they are channeling basically the same spirit, humanity. An LLM isn't simply limited to its input data; it can extrapolate and recombine human expression in ways that are very difficult for us to understand and follow. It has the power to surprise us and teach us. Do not think of it as learning from an artificial intelligence. It comes from us. It is our own spirit beamed back at us from the LLM.

Feel free to get to know this human spirit, to learn what it can do, to rely on it for help and advice. Treat it like you would a friend or assistant who is brilliant but also clumsy. Develop an intuition for the kinds of questions it can faithfully answer and the kinds that are beyond its grasp.

It can, of course, be used harmfully. My niece is an artist, and we talked about AI just recently. She wonders whether there will still be a market for her work. This more accurate understanding of AI offers a much more hopeful perspective on our future. It can autocomplete an intelligence, but not very far. Along a chain of critical decisions, where each outcome rests on all the ones before it, and where it must solve problems in a context that is not in its training data, it begins to falter. This limitation is baked into an autocompleted intelligence.

It can sometimes hallucinate a simulation of originality. These works of ambiguous authorship could be used against us, but they will never mean to us what human work does. At the same time, AI can empower artists: many aspects of entrepreneurship are made easier with it.

The solution, as I see it, is to make the personal decision to use it for good. It is like the opening of The Hunger Games, where the tools and weapons are piled in the middle of the arena. It’s the wrong time to be standing still. Whoever makes the most of these tools will shape how humanity uses them in the long term.

Let’s push back on the clever marketing of OpenAI. Let’s start calling it Autocompleted Intelligence. It reflects a much more accurate understanding of the technology.

[1] That happens to be my dad's name, so I'm not even safe in my email inbox.